Robotics and Interactive Systems Engineering (RISE) Lab

The Robotics and Interactive Systems Engineering (RISE) Laboratory focuses on the design and development of Human-Machine Collaborative Systems (HMCS) and the study of human-machine haptics. We design and develop robotic systems that amplify and/or assist human physical capabilities in tasks that require learned skills, judgement, and precision. These systems combine human judgement and experience with physical and sensory augmentation, using advanced display and robotic devices, to improve overall performance. In haptics research, we are interested in understanding how humans interact with the physical (or virtual) world through the sense of touch. Our research explores aspects of human dynamics and psychophysics, such as force control and perception, that affect human-machine interaction and are essential to the advancement of future human-machine systems. Applications of the research extend to many areas, including haptic (force and/or tactile feedback) interface design, development of simulation and training platforms for various applications, and human factors.

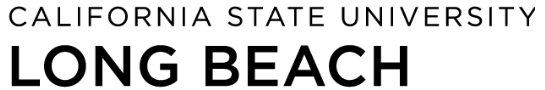

Immersive virtual reality with CAVE VR system

The CAVE VR lab was established with support from an NSF MRI grant (Award # 1626655) to acquire a virtual environment (Visbox VisCube M4 CAVE system) that facilitates interdisciplinary research in human-machine interaction. The room-size system includes a four-wall projection system, an eight-camera real-time motion capture system, and a control software suite that allows customized software development and additional hardware integration. A flight control joystick and tactile feedback units have been integrated into the CAVE system to enhance its capabilities for research in human-automation teaming and multi-modal feedback displays. The current project involves the development and testing of Urban Air Mobility (UAM) concepts of operation.

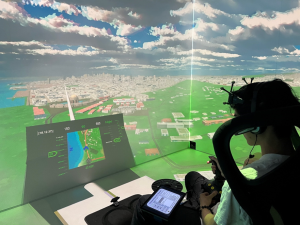

Gait and hand rehabilitation

The research focuses on applying multi-modal feedback (visual, haptic, and/or audio) systems to assessment and to the development of training tools for rehabilitation. Several hardware and user interfaces have been developed, including an embedded system for gait asymmetry detection and training for post-stroke patients, and a system with visual and tactile feedback for hand function assessment and training for individuals with hand impairment. The work involves collaboration with Electrical Engineering and Physical Therapy faculty.

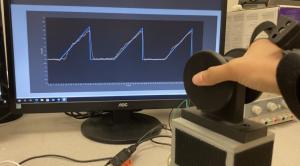

User interface design for NASA NextGen cockpit display

The 3D Volumetric Cockpit Situation Display (CSD) was developed by NASA as part of the Cockpit Display of Traffic Information (CDTI) program. Because the CSD is intended for use in the cockpit, its advanced features, such as 2D-to-3D manipulation and real-time path planning, limit the usefulness of traditional input devices. The research explores new design criteria for the next generation of human input devices that can combine 3D information display with multi-modal feedback for real-time flight planning. This collaborative research was conducted under the NASA URC - Center for Human Factors in Advanced Aeronautics and Technologies (CHAAT) at CSULB.

Selected publications:

- J. Cheung, M. Schmitz, T. Strybel, P. Marayong, V. Battiste, S. Ahuja, K. Vu, & P. Shankar, “Development of Android-based messaging application for onboard communication for UAM simulation”, AHFE International Conference (December, 2024).

- T. Strybel, V. Battiste, K. Vu, P. Marayong, S. Ahuja, M. Schmitz, J. Cheung, C. Culver, A. Alfaroarevalo & P. Shankar, “Evaluation of voice vs. text communication modes in simulated UAM operations”, In: Alexander M. Yemelyanov and Lisa Jo Elliott (eds) Neuroergonomics and Cognitive Engineering. AHFE International Conference (July, 2024). AHFE Open Access, vol 126. AHFE International, USA.

- K. Haneji, K. Leung, A. Tran, J. Cheung, W. Deabaapilux, P. Marayong, K.L. Vu, P. Shankar, T.Z. Strybel, V. Battiste, “Use of tactile alerts in urban air mobility vehicles”, In: Gesa Praetorius, Charlott Sellberg and Riccardo Patriarca (eds) Human Factors in Transportation. International Conference on Applied Human Factors and Ergonomics. AHFE Open Access, vol 95, July 2023.

- K. A. Young, P. Marayong, & K-P. L. Vu, “Faculty mentor training to change mentoring practices at a diverse R2 university”, Journal on Excellence in College Teaching. 33(4), 105-132, 2022.

- A. Taing, S. T. Nguyen-Rodriguez, N. Rayyes, P. Marayong, and P. Buonora, “Student Perceptions of Undergraduate Research-Infused Courses”, Understanding Interventions Journal, Vol. 13(1), Spring 2022.

- P. Shankar, P. Marayong, T. Strybel, V. Battiste, H. Nguyen*, J. Cheung, J. Viramontes, "Urban Air Mobility: Design of a Virtual Reality Testbed and Experiments for Human Factors Evaluation", ASME International Mechanical Engineering Congress and Exposition, 2022.

- T. Strybel, V. Battiste, P. Marayong, P. Shankar, J. Viramontes, H. Nguyen*, and J. Cheung, "Preliminary validation of a virtual UAM vehicle and simplified cockpit interface", 66th Human Factors and Ergonomics Society Annual Meeting, Vol. 66(1), 39-39, 2022.

- P. Marayong, P. Shankar, J. Wei, H. Nguyen, T.Z. Strybel, and V. Battiste, “Urban air mobility testbed using CAVE virtual reality environment”, IEEE Aerospace Conference, 2020.

- P. Marayong, T.Z. Strybel, J. Robles, R. O’Connor, K. Vu, and V. Battiste, “Force feedback integration in NASA NextGen Cockpit Situation Display”, AIAA Journal of Air Transportation, Vol. 25, No. 1, pp. 17-25, 2017.

- I. Khoo, P. Marayong, V. Krishnan, M. Balagtas, O. Rojas, and K. Leyba, “Real-time biofeedback device for gait rehabilitation of post-stroke patients”, Springer Biomedical Engineering Letters, pp. 1-12, 2017. Article ID 10.1007/s13534-017-0036-1

- V. Krishnan, I. Khoo, P. Marayong, K. Demars, Q. Duong, and A. Matheson, “Post-stroke symmetrical gait rehabilitation: a pilot study,” Archives of Physical Medicine and Rehabilitation. (ACRM), October, 2015.

- E. Park, J. Robles, P. Sim, R. O’Connor, K. Monk, P. Marayong, T. Strybel, and K. Vu, “Development of haptic assistance for route assessment tool of NASA NextGen volumetric cockpit situation display”, International Conference on Human-Computer Interaction, pp. 163-172, 2013. (Best Paper Award in the Human Interface and the Management of Information Area)

- J. Robles, M. Sguerri, C. Rorie, K. Vu, T. Strybel, and P. Marayong, “Integration Framework for NASA NextGen Volumetric Cockpit Situation Display with Haptic Feedback”, IEEE International Conference on Robotics and Automation (ICRA), pp. 1033-1037, 2012.